AI-powered tools like ChatGPT, Google Gemini, Microsoft Copilot, and DeepSeek are transforming how businesses operate. From generating content and writing e-mails to creating reports and organizing tasks, these chatbots can be powerful productivity boosters.

But as these tools become more embedded in daily workflows, a critical question arises for business owners: What happens to the data you share with these platforms — and how secure is it?

Your Data Is Being Collected — Here’s How

Most AI chatbots operate in the cloud and rely on your input to improve performance. But that input isn’t always kept private. Whether you’re drafting internal communications or asking for sensitive business advice, here’s how your data could be at risk:

ChatGPT (OpenAI)

- Collects: your prompts, device type, IP address, and general location.
- Shares data with third-party vendors and service providers.
- Stores your conversations and may use them to improve future models unless you opt out in your settings.

Microsoft Copilot

- Gathers data across Microsoft 365 apps.
- Tracks browsing history and in-app behavior.
- May use your data for AI training and even targeted advertising.

Google Gemini

- Retains chat data for up to three years.
- Human reviewers may access chats to improve services.
- Claims not to use data for ads, but policies can change.

DeepSeek

- Tracks everything from prompts and device info to typing patterns.
- Stores data on servers located in China.
- Uses data for AI training and advertising insights, often without explicit opt-in.

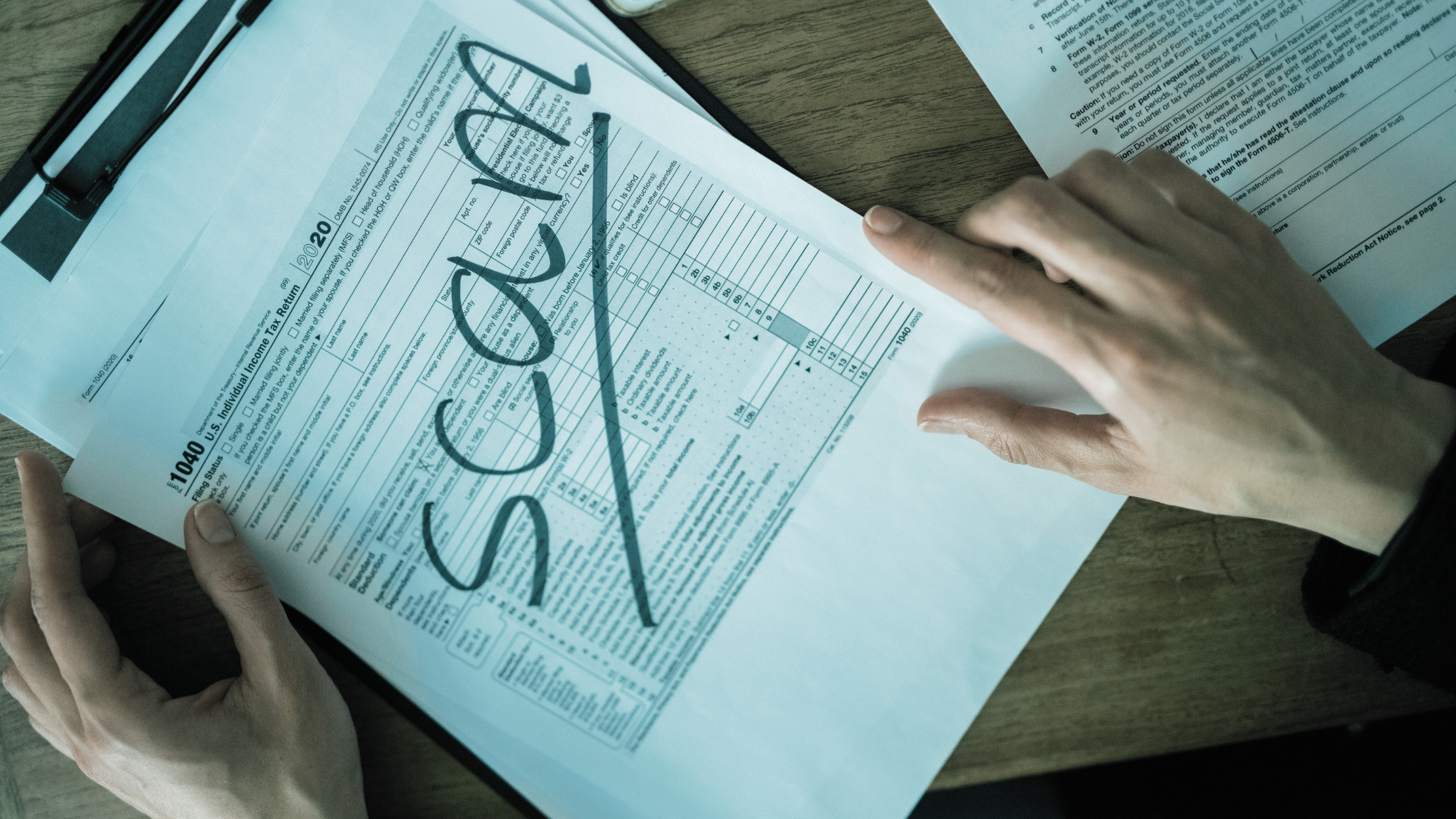

What This Means for Your Business

If your team is using these tools to brainstorm, create client-facing documents, or manage internal workflows, sensitive data could be at risk.

Here are a few of the top risks to be aware of:

- Data Privacy Violations: Some platforms may allow third-party access or store data without clear consent, putting your business at odds with privacy laws.
- Cybersecurity Threats: Security researchers have shown that weaknesses in tools like Copilot can be exploited for spear-phishing and data leaks.
- Compliance Issues: If you're in a regulated industry like healthcare, finance, or law, the way a chatbot handles your data could put you in violation of GDPR, HIPAA, or other standards.

How To Use Chatbots Safely in Your Business

- Don't Share Confidential Data: Treat chatbots like public forums unless you're sure of their security.
- Review Each Platform's Privacy Settings: Some offer opt-out options for data storage or AI training.
- Use Governance Tools: Platforms like Microsoft Purview can help your IT team enforce data controls and limit access.
- Stay Informed: Keep up with policy changes and emerging risks tied to AI use.

Secure Your Business Before It’s Too Late

AI tools are here to stay — but so are the risks that come with them. The good news? You don’t have to face them alone.

At Iler Networking & Computing, we help businesses like yours stay protected while taking advantage of the latest technology. Start with a FREE Network Assessment to uncover hidden vulnerabilities and get expert guidance on how to secure your systems and data.

Click here to schedule your FREE Network Assessment